Reinforcement Learning for Quadruped

Weaving through poles using a Unitree Go1

Project: Quadruped Reinforcement Learning for Dynamic Weaving on Unitree Go1

Description:

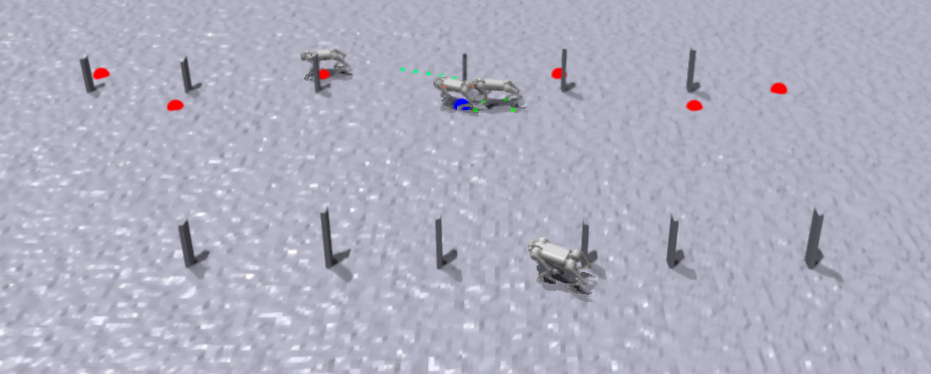

The objective of this project is to use reinforcement learning to replicate, on a quadruped, the weaving motion animals perform around a line of poles. Pole weaving is one of the most common tricks performed by trained animals, and I aim to reproduce it with precision and agility. To do so, I train policies in the NVIDIA Isaac Gym simulator for the Unitree Go1 quadruped. Through this setup, I seek to better understand animal-like locomotion and extend what is achievable in robotic motion control.

Training:

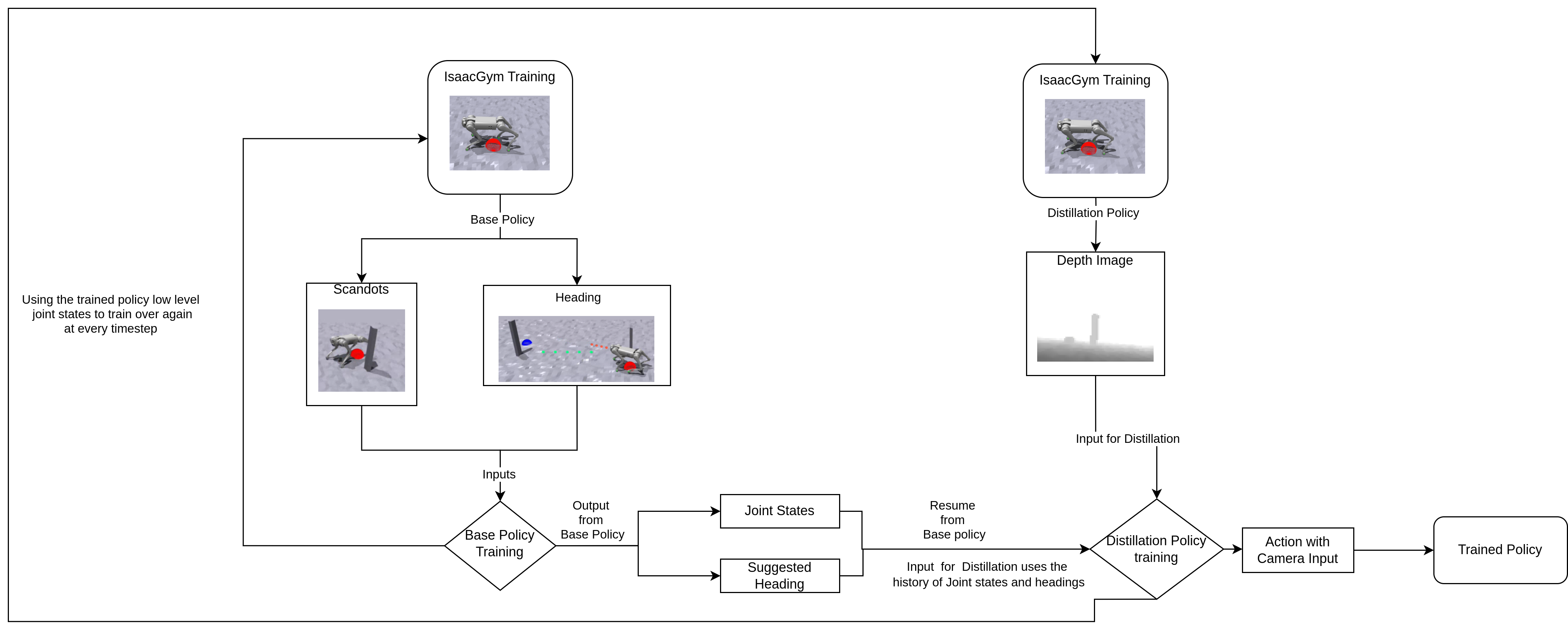

Training is a two-step process:

Base Policy

The base policy is trained in simulation with access to all available (privileged) information, in particular the goal positions and headings. Scan-dot positions serve as the primary objectives, and the quadruped is rewarded for reaching them. Collisions and exceeding joint or torque limits are penalized, which drives training to refine the joint states toward the best task performance.
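A minimal sketch of the reward structure described above, assuming a single goal point per step, a fixed goal radius, and one penalty unit per limit violation. The weights, the radius, and the `StepState`/`compute_reward` names are hypothetical and not taken from the project's code; a real Isaac Gym setup would compute these terms on batched tensors across all parallel environments.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

# Hypothetical reward weights and goal radius (illustrative values only).
GOAL_RADIUS_M = 0.2
W_GOAL = 10.0
W_COLLISION = 1.0
W_LIMIT = 0.5


@dataclass
class StepState:
    """Per-step quantities the reward depends on (hypothetical container)."""
    base_xy: Tuple[float, float]
    goal_xy: Tuple[float, float]
    in_collision: bool
    joint_pos: Sequence[float]
    torques: Sequence[float]


def compute_reward(state: StepState,
                   joint_lower: Sequence[float],
                   joint_upper: Sequence[float],
                   torque_limit: float) -> float:
    reward = 0.0
    # Bonus when the base is within GOAL_RADIUS_M of the current goal.
    if math.dist(state.base_xy, state.goal_xy) < GOAL_RADIUS_M:
        reward += W_GOAL
    # Penalty for any collision reported by the simulator this step.
    if state.in_collision:
        reward -= W_COLLISION
    # One penalty unit per joint outside its position limits...
    violations = sum(
        1 for q, lo, hi in zip(state.joint_pos, joint_lower, joint_upper)
        if q < lo or q > hi
    )
    # ...and one per joint whose torque magnitude exceeds the limit.
    violations += sum(1 for tau in state.torques if abs(tau) > torque_limit)
    return reward - W_LIMIT * violations
```

Keeping the goal bonus sparse and the penalties dense is only one possible shaping; the relative weights would need tuning against the actual task.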

Distillation Policy

The second step trains the distillation policy, which is built on top of the base policy. In this phase, the joint states produced by the base policy are stored in a history buffer and used for further refinement. Unlike the base policy, the distillation policy has no access to privileged information such as scan dots and heading; instead, it relies on camera input. From the joint-state history and the camera data, the policy learns to determine the appropriate heading relative to the obstacles.

The quadruped continues to receive rewards and penalties during this phase so that the resulting policy is robust. Once trained, the policy is exported as a TorchScript (PyTorch JIT) model for deployment on the real robot.
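The joint-state history used by the distillation policy can be pictured as a fixed-length buffer of recent joint states that is concatenated with the depth latent before being fed to the policy. This is a minimal sketch under assumed sizes: the history length of 10 and the `JointHistory`/`student_input` names are hypothetical; the 12 joint values reflect the Go1's 12 actuated joints, and the 32-dimensional latent matches the 1x32 depth latent described in the sim-to-real section.

```python
from collections import deque
from typing import List, Sequence

NUM_JOINTS = 12   # the Go1 has 12 actuated joints (3 per leg)
HISTORY_LEN = 10  # hypothetical history length
LATENT_DIM = 32   # matches the 1x32 depth latent described in this write-up


class JointHistory:
    """Fixed-length FIFO of past joint-state vectors, zero-initialised."""

    def __init__(self, length: int = HISTORY_LEN, dim: int = NUM_JOINTS):
        self.dim = dim
        self.frames = deque([[0.0] * dim for _ in range(length)], maxlen=length)

    def push(self, joint_state: Sequence[float]) -> None:
        if len(joint_state) != self.dim:
            raise ValueError(f"expected {self.dim} values, got {len(joint_state)}")
        # Appending to a full deque with maxlen drops the oldest frame.
        self.frames.append(list(joint_state))

    def flatten(self) -> List[float]:
        # Oldest frame first, newest frame last.
        return [x for frame in self.frames for x in frame]


def student_input(history: JointHistory, depth_latent: Sequence[float]) -> List[float]:
    """Concatenate the joint-state history with the depth latent (hypothetical layout)."""
    if len(depth_latent) != LATENT_DIM:
        raise ValueError(f"expected a {LATENT_DIM}-dim latent")
    return history.flatten() + list(depth_latent)
```

Zero-initialising the buffer means the first few policy steps see padded history; warm-starting it with the current joint state is an equally valid choice.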

The training flowchart is as follows:

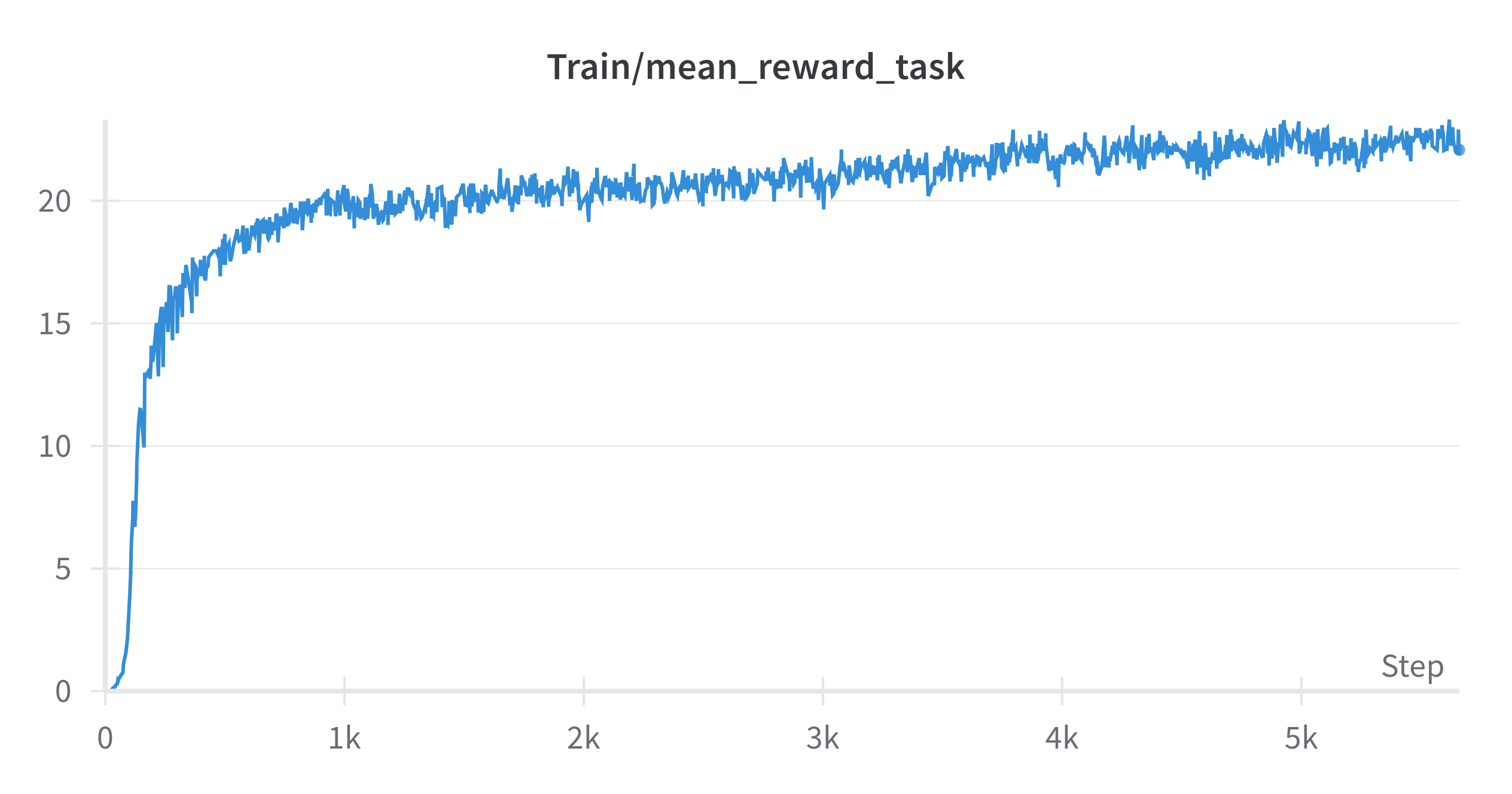

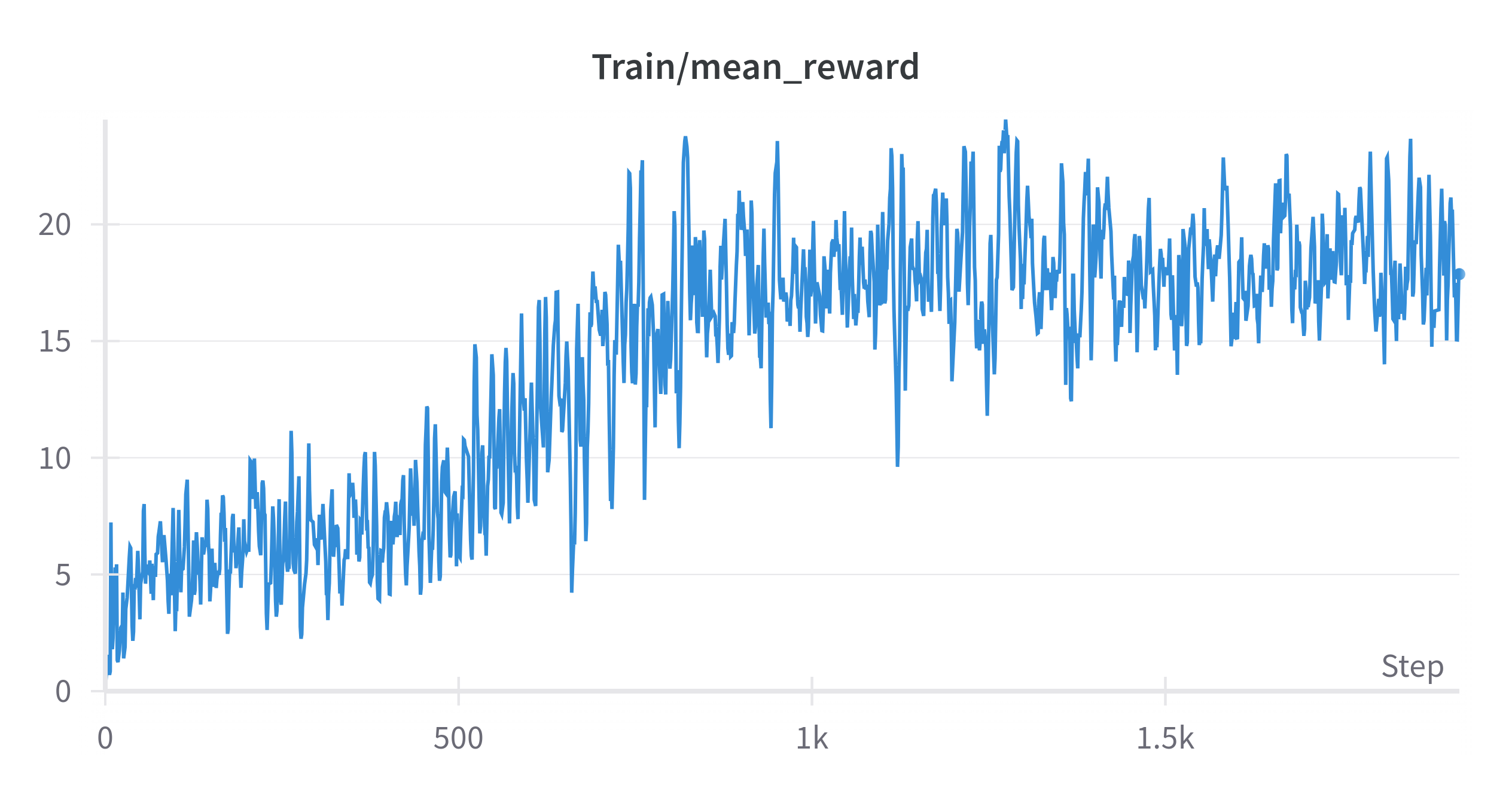

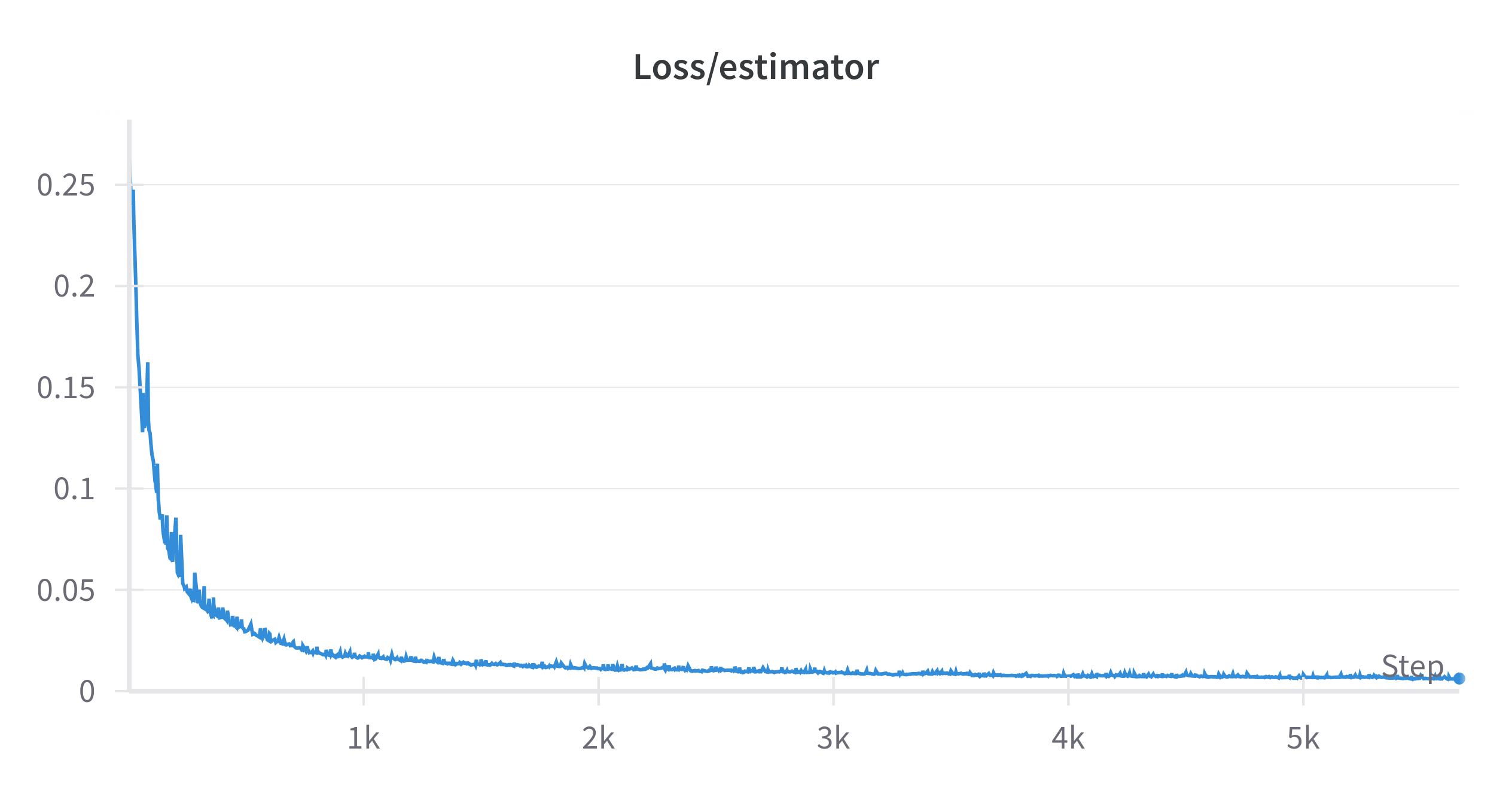

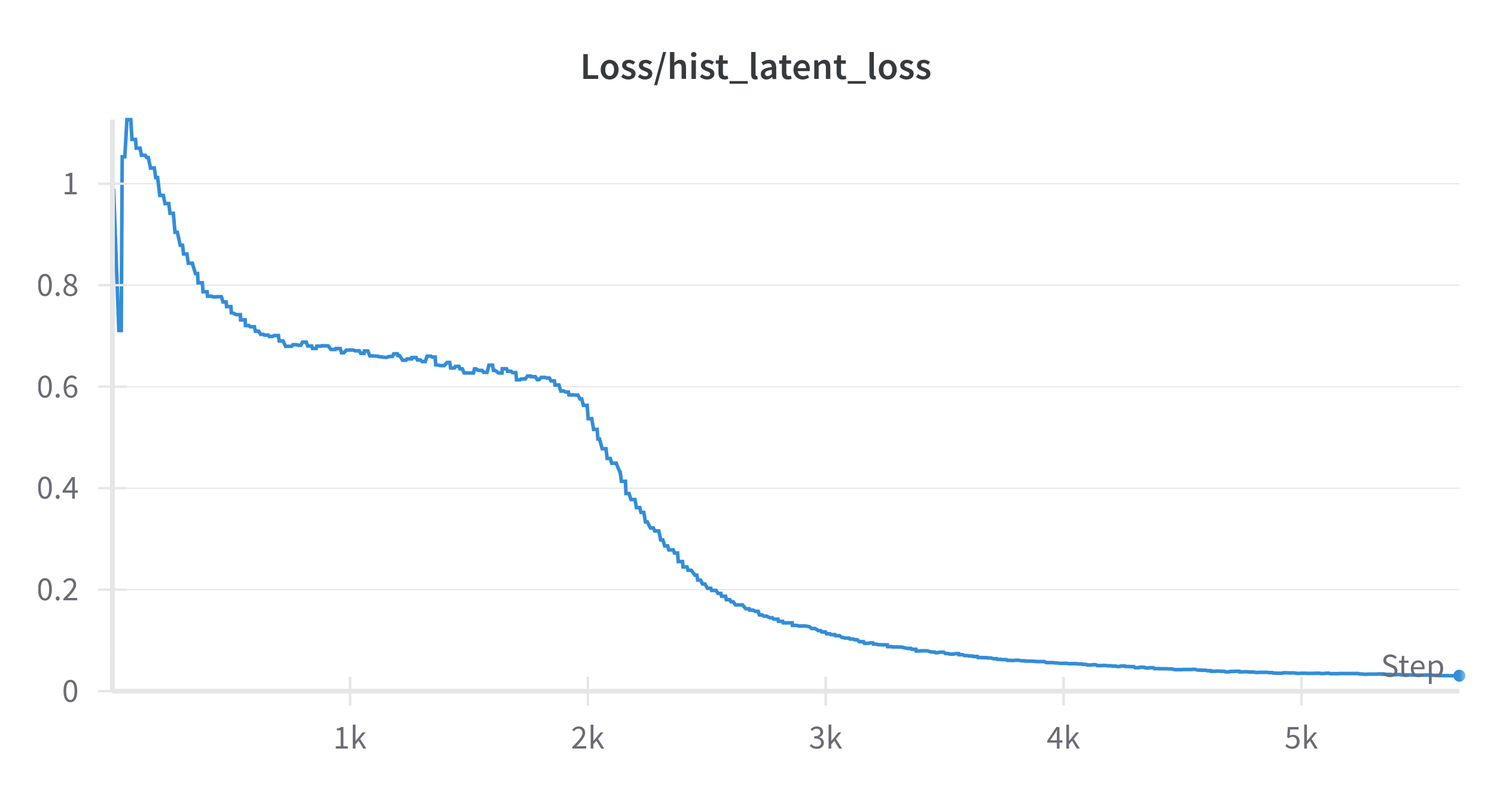

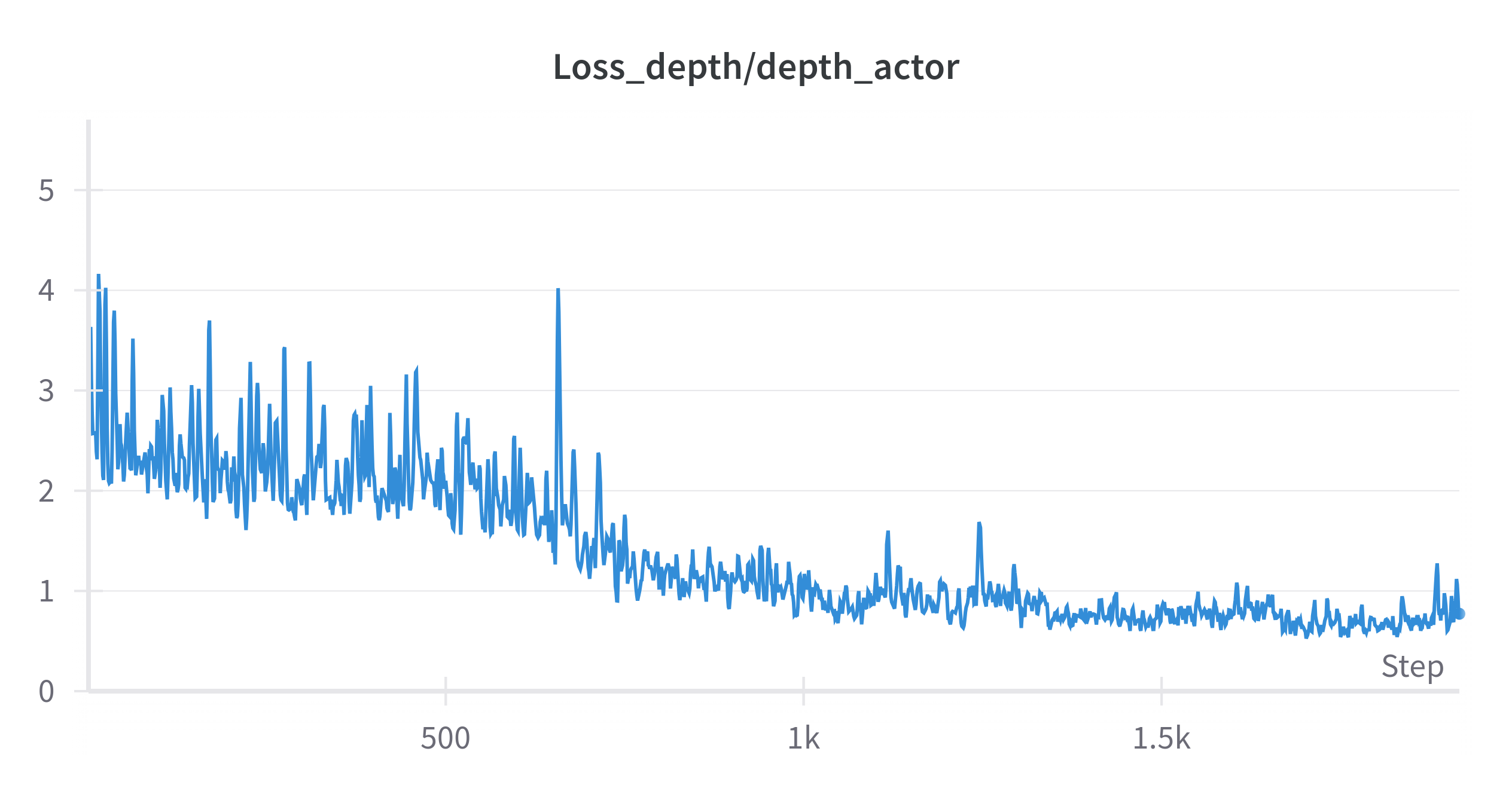

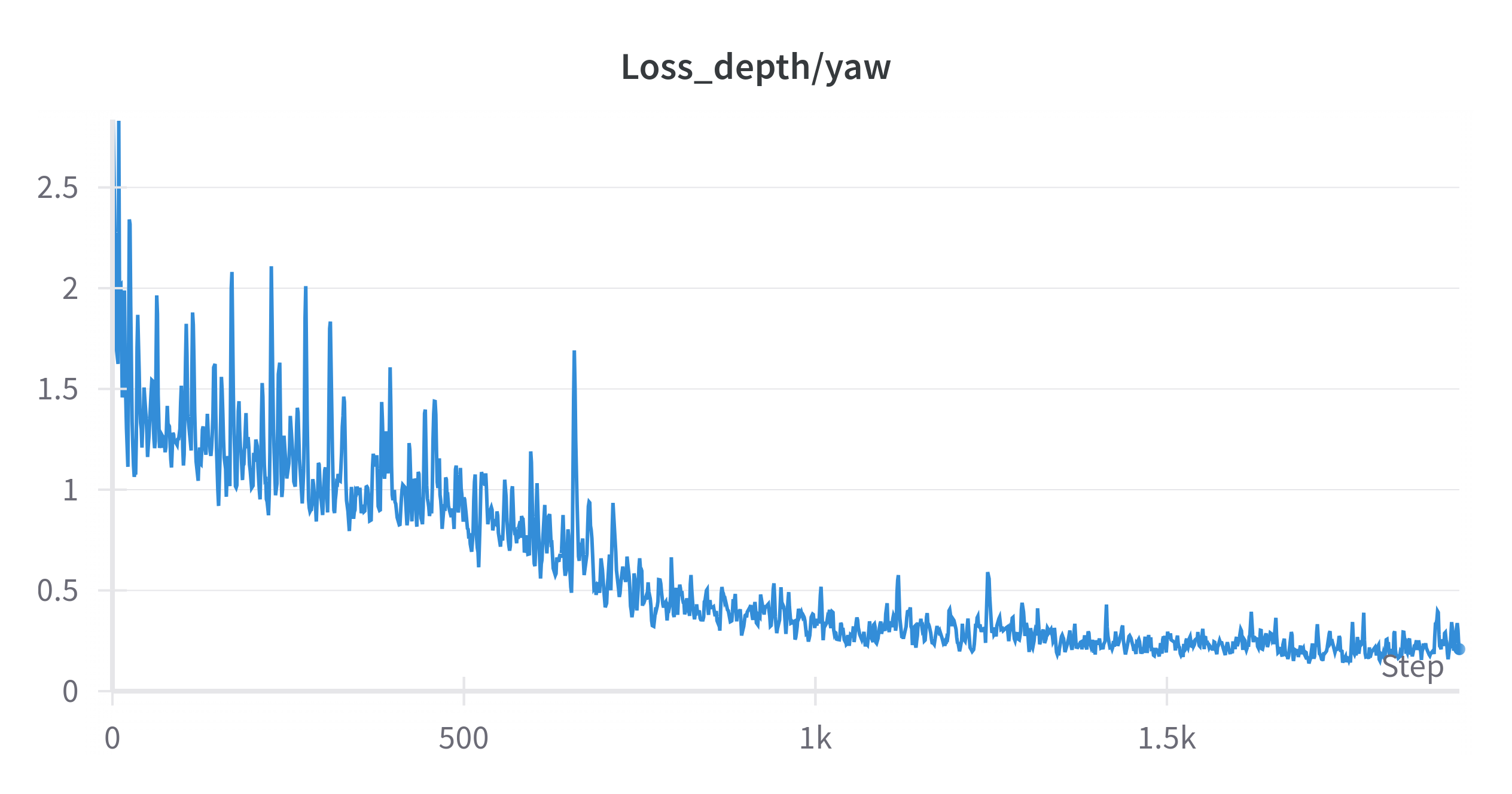

During training, the loss curves are monitored closely to track the policy's progress. Once the loss converges, the trained policy is first tested to verify that the joints behave correctly. After this validation, training either continues with the distillation step or the policy is saved for deployment on the real robot. This sequence ensures that every policy is validated before it moves on, which improves reliability and performance in real-world use.
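Convergence here is judged from the loss graphs; one way to automate a first pass is to compare moving averages of the logged loss. A minimal sketch, assuming a flat list of per-iteration loss values; the window size, tolerance, and the `loss_has_plateaued` name are hypothetical, and a plateau is only a cue to run the joint checks, not a replacement for them.

```python
from typing import Sequence


def loss_has_plateaued(losses: Sequence[float], window: int = 50,
                       rel_tol: float = 0.01) -> bool:
    """Return True when the mean loss over the last `window` iterations
    differs from the mean over the preceding `window` by less than
    `rel_tol` (relative). Returns False until 2 * window values exist."""
    if len(losses) < 2 * window:
        return False
    previous = sum(losses[-2 * window:-window]) / window
    recent = sum(losses[-window:]) / window
    if previous == 0.0:
        return recent == 0.0
    return abs(previous - recent) / abs(previous) < rel_tol
```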

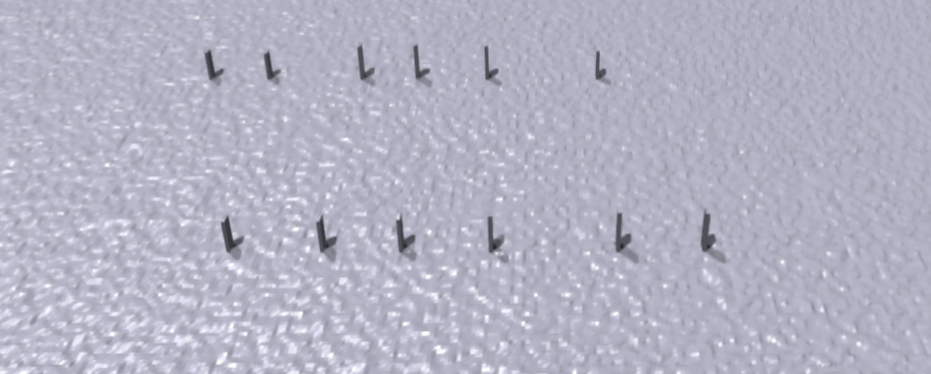

Terrain Manipulation

Creating the gym terrain that simulates the weaving poles was a crucial step in this project. Multiple environments were set up for training, each playing a distinct role in the learning process. Training ran on a remote machine using Docker, for scalability and efficient use of resources.

Terrain design matters because it directly shapes how the quadruped interacts with its surroundings, and therefore how the actor is trained. By accurately representing the obstacles the quadruped will encounter, the terrain lets the actor learn and adapt its behavior to them, which leads to more robust and effective policies.
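To make the pole terrain concrete, the sketch below rasterises poles into a heightfield, the grid-of-heights representation commonly used for procedurally generated terrain in Isaac Gym, and places goals on alternating sides of the poles so that visiting them in order produces a weaving path. The function names, grid resolutions, pole dimensions, and the alternating-goal scheme are all assumptions for illustration; they are not taken from the project's terrain code.

```python
import numpy as np
from typing import List, Sequence, Tuple


def pole_heightfield(length_m: float, width_m: float, pole_xs: Sequence[float],
                     pole_radius_m: float = 0.1, pole_height_m: float = 1.0,
                     horizontal_scale: float = 0.1,
                     vertical_scale: float = 0.005) -> np.ndarray:
    """Flat int16 heightfield with cylindrical poles on the centre line (y = 0).
    Cell values are heights in units of vertical_scale metres (hypothetical scales)."""
    rows = int(round(length_m / horizontal_scale))
    cols = int(round(width_m / horizontal_scale))
    hf = np.zeros((rows, cols), dtype=np.int16)
    # Metric coordinates of each cell centre; y is centred on the course axis.
    xs = (np.arange(rows) + 0.5) * horizontal_scale
    ys = (np.arange(cols) + 0.5) * horizontal_scale - width_m / 2
    X, Y = np.meshgrid(xs, ys, indexing="ij")
    pole_units = int(round(pole_height_m / vertical_scale))
    for px in pole_xs:
        # Raise every cell whose centre lies inside the pole's footprint.
        inside = (X - px) ** 2 + Y ** 2 <= pole_radius_m ** 2
        hf[inside] = pole_units
    return hf


def slalom_goals(pole_xs: Sequence[float],
                 lateral_offset_m: float) -> List[Tuple[float, float]]:
    """One goal beside each pole, alternating sides so the path weaves."""
    return [(px, lateral_offset_m if i % 2 == 0 else -lateral_offset_m)
            for i, px in enumerate(pole_xs)]
```

Randomising the pole spacing and offset per environment would be one way to give the parallel environments different roles, though that is a design assumption rather than something stated here.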

SIM TO REAL

Transferring the policy from simulation to real-world deployment is an ongoing and critical part of this project. Deployment is built on ROS2 to keep the solution robust and scalable.

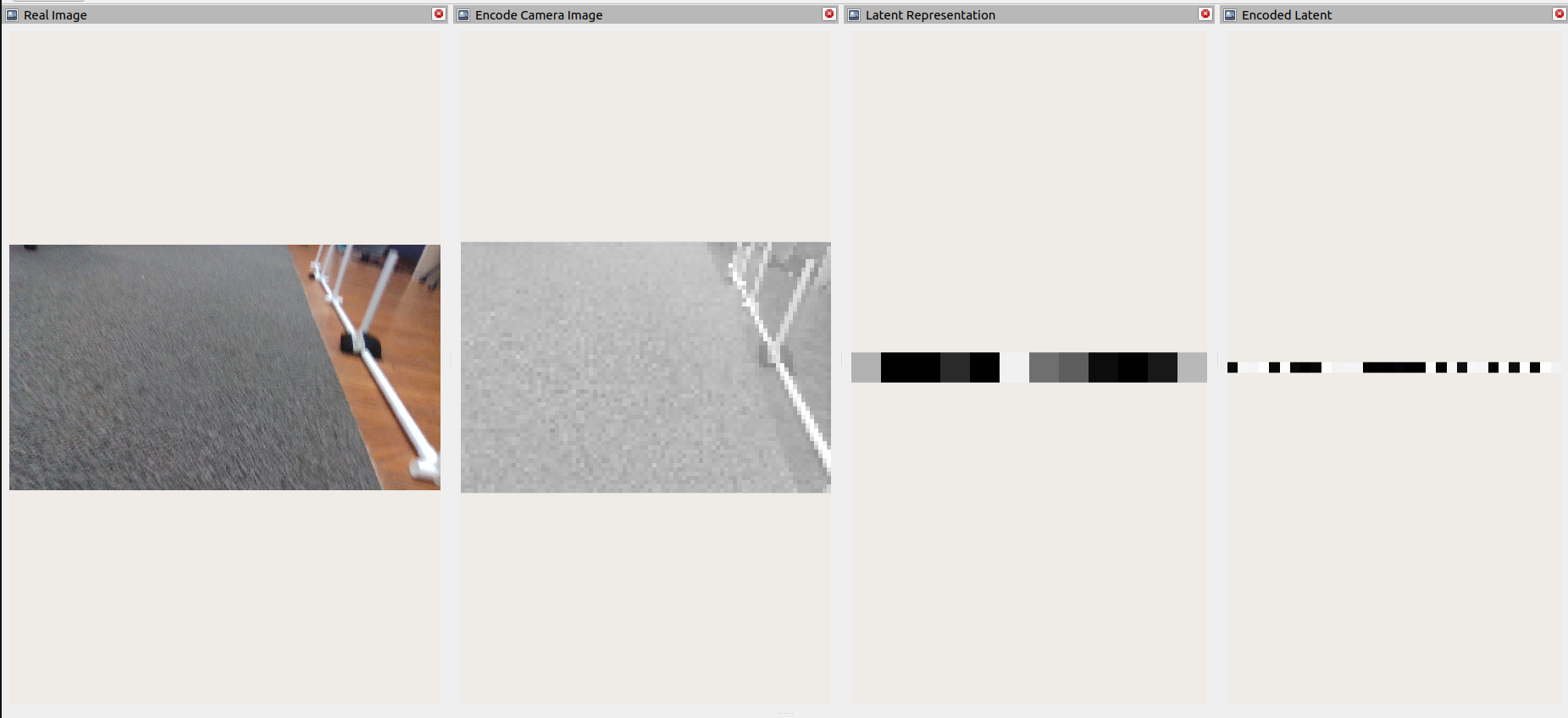

In simulation, the policy relies on two key inputs: the observation space and the depth space. Together they form the backbone of the sim-to-real pipeline for closed-loop policy deployment. Central to this pipeline is the sensor data from the Intel RealSense D435i camera.

Turning raw camera input into usable data involves several processing stages. First, the raw image is transformed to match the quality of the images seen in simulation, typically yielding a compressed, single-channel version of the frame.

This image is then compressed further by a neural network, which produces a compact 1x32 depth latent representation of the scene captured by the camera.
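The preprocessing and compression steps above can be sketched as follows. Everything here is an assumption for illustration: the clipping range, the 58x87 output size, nearest-neighbour resizing, and normalisation to [-0.5, 0.5] are hypothetical choices (in practice they must match whatever the simulated depth camera produced during training), and `DepthEncoderStub` is a random linear projection standing in for the trained encoder network, used only to show the shapes involved. The 0.001 m depth unit is the RealSense D400-series default.

```python
import numpy as np

# Hypothetical preprocessing parameters; the real values must match the
# simulated depth camera used during training.
DEPTH_SCALE_M = 0.001            # metres per raw unit (RealSense default)
NEAR_CLIP_M, FAR_CLIP_M = 0.0, 2.0
OUT_SHAPE = (58, 87)             # hypothetical downsampled (rows, cols)
LATENT_DIM = 32                  # the 1x32 latent described above


def preprocess_depth(raw: np.ndarray) -> np.ndarray:
    """uint16 depth frame -> clipped, downsampled float32 array in [-0.5, 0.5]."""
    depth_m = np.clip(raw.astype(np.float32) * DEPTH_SCALE_M, NEAR_CLIP_M, FAR_CLIP_M)
    # Nearest-neighbour downsampling by picking evenly spaced rows/columns.
    rows = np.linspace(0, depth_m.shape[0] - 1, OUT_SHAPE[0]).round().astype(int)
    cols = np.linspace(0, depth_m.shape[1] - 1, OUT_SHAPE[1]).round().astype(int)
    small = depth_m[np.ix_(rows, cols)]
    normalised = (small - NEAR_CLIP_M) / (FAR_CLIP_M - NEAR_CLIP_M) - 0.5
    return normalised.astype(np.float32)


class DepthEncoderStub:
    """Stand-in for the trained depth encoder: a fixed random linear
    projection followed by tanh. It only demonstrates the tensor shapes;
    the real encoder is a trained neural network."""

    def __init__(self, seed: int = 0):
        n_in = OUT_SHAPE[0] * OUT_SHAPE[1]
        rng = np.random.default_rng(seed)
        self.weight = (rng.standard_normal((n_in, LATENT_DIM))
                       / np.sqrt(n_in)).astype(np.float32)

    def __call__(self, depth: np.ndarray) -> np.ndarray:
        # Flatten to one row vector and project to a (1, 32) latent.
        return np.tanh(depth.reshape(1, -1) @ self.weight)
```

On the robot, the same `preprocess_depth` settings would have to be mirrored exactly in the simulated camera pipeline; any mismatch in clipping or resolution shows up directly as a sim-to-real gap in the latent.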

Through these processing and compression stages, the pipeline aims to bridge the gap between simulation and the real world, so that the policy performs robustly across varied environments.

The latent representation, together with the observation space, is fed into the trained model, which generates actions in the form of joint states. This allows a policy developed in simulation to adapt and perform in real-world scenarios.
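The final step, turning the policy's outputs into joint commands, can be sketched as below. It assumes a legged_gym-style convention in which actions are clipped, scaled, and added to a nominal standing pose to give joint position targets for a PD controller. The pose, scale, and clip values and the function names are hypothetical and not taken from the project.

```python
from typing import List, Sequence

NUM_JOINTS = 12
# Hypothetical nominal standing pose (hip, thigh, calf per leg), in radians.
DEFAULT_JOINT_POS = [0.0, 0.8, -1.5] * 4
ACTION_SCALE = 0.25  # hypothetical
ACTION_CLIP = 1.2    # hypothetical


def build_policy_input(proprio_obs: Sequence[float],
                       depth_latent: Sequence[float]) -> List[float]:
    """Concatenate the observation vector with the depth latent."""
    return list(proprio_obs) + list(depth_latent)


def actions_to_joint_targets(actions: Sequence[float],
                             default_pos: Sequence[float] = DEFAULT_JOINT_POS,
                             scale: float = ACTION_SCALE,
                             clip: float = ACTION_CLIP) -> List[float]:
    """Map raw policy outputs to joint position targets for a PD controller."""
    if len(actions) != NUM_JOINTS or len(default_pos) != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} values")
    # Clip each action, scale it, and offset it from the nominal pose.
    return [q0 + scale * max(-clip, min(clip, a))
            for a, q0 in zip(actions, default_pos)]
```

Expressing actions as offsets from a standing pose keeps a zero action physically safe, which is one reason this convention is popular for position-controlled quadrupeds.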

To run this pipeline on the robot, a dedicated ROS2 package was developed. The package takes the camera input and applies the compression models to generate the latent representations, and because it is built on ROS2, it communicates efficiently with the broader robotics ecosystem.
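In a deployment like this, it is common for the camera and encoder to run more slowly than the control loop, so the latest latent is reused for several control steps. Assuming that holds here, the class below is a framework-agnostic sketch of that caching logic, without any ROS2 calls; the update ratio of 5 and the `LatentCache` name are hypothetical, and the camera callback and policy call that would surround it in the real package are not shown.

```python
from typing import Any, Callable, Optional


class LatentCache:
    """Runs the depth encoder only every `update_every` control steps and
    reuses the cached latent in between (hypothetical helper)."""

    def __init__(self, encoder: Callable[[Any], Any], update_every: int = 5):
        if update_every < 1:
            raise ValueError("update_every must be >= 1")
        self.encoder = encoder
        self.update_every = update_every
        self.step = 0
        self.latent: Optional[Any] = None

    def get(self, frame: Any) -> Any:
        # Refresh on the first call and then every `update_every` steps.
        if self.latent is None or self.step % self.update_every == 0:
            self.latent = self.encoder(frame)
        self.step += 1
        return self.latent
```

In a ROS2 node, `get` would be called from the control timer with the most recent depth frame received on the camera subscription.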

A video demonstrating the depth policy running on the quadruped during teleoperation is attached below.

SIM TO REAL PIPELINE TEST

A sim-to-real pipeline test of the quadruped_locomotion_project package:

This project is heavily inspired by the Extreme-Parkour package created by CMU and is built on top of NVIDIA's RSL-RL package.

The code can be found at this GitHub link.